Newsletter 29

Peter Shaw (HPA, UK), Pascal Croüail (CEPN, France)

Themes and issues arising

The international scene-setting presentations clearly highlighted the very substantial radiation exposures associated with the medical sector – in terms of individual and collective doses, for both patients and staff. These are increasing significantly: the average per caput dose from medical exposures in some European countries is now thought to exceed that from natural sources, which could be regarded as something of a milestone in the evolution of radiation protection.

Of course, the benefits produced through medical exposures – both individually and collectively – are generally huge, and, from a global perspective, are also increasing as medical technology and procedures become more sophisticated and widespread. An increase in doses, set against an increase in benefits, does not, of course, mean that ALARA is not being achieved. Instead, it is the potential for, and costs of, dose reduction measures that need to be considered. The presentations highlighted a range of dose reduction measures in areas such as nuclear medicine and (especially) CT, many of which can substantially reduce doses at little or even no cost. On this basis alone, it must be concluded that ALARA is far from being achieved.

The dominance of CT doses in national dose statistics (accounting for up to 80% of the collective dose in some countries) was noted in several presentations; these doses have increased by up to a factor of 3 in 20 years. However, it would seem from a number of presentations that there is the potential to reverse this trend, through a combination of:

- Accurate referrals;

- Optimised equipment set up and operation;

- Optimising the image quality according to the clinical purpose or diagnostic needs of the examination (i.e. using an acceptable rather than the best achievable image quality); and

- Improving the education and training of medical personnel.

The very large scope for dose reduction raises questions about which factors help drive ALARA implementation, and what obstacles exist. The most obvious factor is the legal requirement to optimise exposures, which is driven by Regulatory Authorities and by the medical sector itself. With regard to the latter, there were many excellent examples of ALARA measures being identified and implemented by radiographers, radiologists and medical physics personnel. However, not all staff involved in medical exposures are equally committed to ALARA, and a repeated theme from the workshop was the need for multidisciplinary teams involving all relevant medical stakeholders (e.g. referrers, physicians and practitioners, nurses, technologists and medical physicists, manufacturers and maintenance engineers of medical radiation devices, etc.), supported by appropriate radiation protection training.

Regarding stakeholder representation, it is often difficult for medical staff to attend radiation protection events: not surprisingly, at this workshop only 30% of the participants were directly employed within the medical sector (compared to 40% from regulatory authorities). However, the workshop highlighted the role of the professional medical societies, which can provide a more effective means of stakeholder involvement, and which are increasingly working together and forging new links through networks such as the European Medical ALARA Network (EMAN - www.eman-network.eu).

One group of stakeholders repeatedly discussed were equipment manufacturers and suppliers (who were not represented at all at the Workshop). It is clear that manufacturers play a large role in optimising doses: through the design of equipment; the modes of operation provided; and the training of users. Efforts are being made to engage with manufacturers and to encourage them to accept that they have responsibilities to enable and support the implementation of ALARA. The hope is to foster a culture of engagement and co-operation between manufacturers, regulators and users. If successful, this could be a very significant step forward.

Other factors that encourage ALARA were identified during the workshop, including the costs of providing medical exposures, the duties of the medical profession, the rights of the patient, and (increasingly) the impact of bad publicity (after radiation accidents or overexposures). Obstacles to ALARA include a lack of resources (both in the medical sector and in regulatory authorities), and a lack of ALARA culture in the sector in general. The question of ALARA culture was raised several times, and interestingly it was suggested that training alone cannot always guarantee the correct attitude and behaviour.

Occupational doses in the medical sector were discussed in several presentations. There are long-standing concerns about staff exposures from interventional radiology, and increasingly there are issues with nuclear medicine, especially hand/finger and lens of the eye doses, which have the potential to exceed dose limits unless the principles of time, distance and (especially) shielding are effectively employed.

It is also possible that staff doses may be higher than records suggest. In some cases, basic precautions such as ensuring the right dosemeter is worn in the right place still remain an issue. On a more positive note, there is increasing interest in the use of electronic personal dosemeters. These can provide an insight into the causes of radiation exposure, and their value in ALARA implementation has already been demonstrated in other sectors. It seems unlikely that they will replace passive dosemeters in the medical sector, at least for the foreseeable future. However, they are already proving useful as a training tool, and for specific ALARA studies.

There were many other interesting presentations and discussions on subjects such as medical screening, individual health assessments, risk communication to patients, clinical audits, peer review and self assessment, which are not summarised here.

Workshop conclusions and recommendations

As mentioned above, the working group presentations, containing details of the discussions, conclusions and recommendations, are available at http://www.eu-alara.net/. A brief summary of these is given below.

Challenges in the optimisation of patient and staff radiation protection

- It is useful to consider a long-term "vision" for optimising medical exposures, for example:

- Avoid all inappropriate medical exposures

- No deterministic injuries to patients or staff

- In every case adopt a patient-centred (i.e. individual) approach to optimisation

NOTE: In addition to the above, the following aim was also suggested:

- "Every CT procedure to give an effective dose below 1 mSv"

This has caused much debate – at the Workshop, and subsequently in collating comments on these conclusions. It has had some support from equipment manufacturers, and is already achievable for some procedures. However, it overlooks the technical problems associated with measuring effective dose, and may not be reasonably achievable in all cases. In addition, it is unclear how this fits into an approach to optimisation based on DRLs. Nevertheless, it has prompted a debate on optimising CT procedures – something which is clearly needed. If only for this reason, it could be considered a useful (but very long-term) challenge.

- Equipment manufacturers and suppliers should work with a multidisciplinary team at the hospital to determine optimum operating parameters, and to ensure that users are familiar with all the optimisation tools available.

- Intelligent software solutions should be developed:

- To help avoid inappropriate referrals, through reference to referral guidelines, clinical indications and patient exposure history tracking.

- To encourage and assist CT operators in delivering optimised dose procedures.

- Image quality is a key factor in the ALARA process: it is important to consider this as well as the doses received, and to understand the inter-relationship with DRLs. Image quality should also be optimised, i.e. of an acceptable quality, rather than the best quality achievable. There is also a link with the quality of the referral information – if this is good, a more informed decision on the required image quality can be made.

- It is important that the effectiveness of ALARA actions is assessed, i.e. through comparing the doses received before and after these actions. Information from research projects and organisations such as EURADOS and ORAMED should be disseminated to the medical sector through the professional societies.

- Access to integrated wide area RIS/PACS[1] solutions is encouraged. These can facilitate effective work flows, data sharing between medical professionals, and re-use of existing images, and can improve the quality and efficiency of care, including the optimisation of medical exposures.

Policies and tools for Implementing ALARA in the Medical Sector

- Clinical audits are considered a very important ALARA tool. They should address both justification and optimisation, and should be adopted across the EU. It is recommended that the European Commission consider a pilot project to undertake clinical audits in Member States that have not yet done so.

- The establishment of national referral criteria is a key element in implementing ALARA, and the European Commission should strongly encourage this in all Member States. Health Authorities should ensure that hospitals adopt these criteria via a multidisciplinary clinical approach to implementation.

- Diagnostic Reference Levels continue to be integral to the ALARA process, although it is important to remember that:

- They are not limits, but an upper boundary to optimisation, i.e. optimisation should be applied below DRLs.

- Doses exceeding DRLs are an indicator of poor practice.

- National circumstances should be taken into account when establishing DRLs, which should be based on common (rather than specialist) working practices. They should include DRLs for paediatric imaging techniques.

- DRLs must be periodically reviewed and updated to ensure they remain fit for purpose.

- The use of lower local DRLs than those established at the national level should be promoted and encouraged.

Education, training and communication to improve ALARA in the medical sector

- Education and training in radiation protection are essential to ALARA. These should be an integral part of the healthcare organisation's health and safety programme, and be subject to performance indicators to assess effectiveness. The clinical audits referred to above should also consider whether suitable staff training is provided.

- Radiation protection training should be provided for all staff involved in patient exposure, but should be targeted and tailored to ensure it is effective. Long and detailed training courses are expensive and difficult to arrange in a clinical environment, and there is evidence that shorter, more focused training packages have a greater impact.

- The purchase of new equipment should include provision for the initial training of users by the suppliers, and this training should be repeated (or refreshed) at appropriate intervals. The content of this training should be discussed and agreed with the site RPE and MPE, who should be encouraged to also actively participate in this training.

- Equipment suppliers must ensure that their own staff are also suitably trained, i.e. able to provide the required training and information to users, especially on appropriate dose reduction techniques. Persons engaged in the maintenance or repair of equipment should also have received training in radiation protection and understand the importance of the ALARA principle.

- Regulatory authorities should ensure that inspectors have a good understanding of the application of radiation protection in the hospital environment, through the provision of specific training if required. Inspections should include an evaluation of the radiation protection training programme for medical staff, which should consider experience and competence, as well as education and training qualifications.

- Although operational dose quantities are essential for quality assurance and optimisation, they are not an appropriate tool for communicating radiation risks to the patient. Even effective dose (which is a risk-based quantity), is not intended to determine the risk to a specific individual. It is suggested that a simple system of dose (or risk) bands be considered – which could be used for communicating the radiation risks to patients, and also to staff.

- Although DRLs are a useful means of identifying and communicating poor practices, they do not do the same in respect of good practices. Some countries have recommended using the 1st quartile of patient dose distributions as representing a "desirable dose value". It is recommended that the possible use of this concept as an optimisation tool be explored further.
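The "desirable dose value" idea in the last recommendation can be illustrated with a short sketch. It computes the quartiles of a dose sample using Python's standard library. The sample values are hypothetical and not taken from any survey, and the remark that reference levels are commonly tied to the 3rd quartile is an assumption added here for context, not a statement from the workshop.

```python
import statistics


def dose_quartiles(doses):
    """Return (1st quartile, median, 3rd quartile) of a patient dose sample."""
    q1, q2, q3 = statistics.quantiles(doses, n=4, method="inclusive")
    return q1, q2, q3


# Hypothetical dose values for one examination type (arbitrary dose units).
sample_doses = [310, 350, 400, 420, 450, 480, 520, 560, 610, 700, 820]

q1, median, q3 = dose_quartiles(sample_doses)
print(f"1st quartile (candidate 'desirable dose value'): {q1:.0f}")
print(f"Median: {median:.0f}")
# Assumption: reference levels are often set near the 3rd quartile.
print(f"3rd quartile: {q3:.0f}")
```

A department could compare its own median against both values: above the 3rd quartile suggests a need for investigation, while at or below the 1st quartile suggests practice worth sharing.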

Technical developments and quality assurance in the implementation of the ALARA principle

- The European Commission should consider establishing a European "platform" on the evaluation of new medical technology and equipment using ionising radiation. The purpose of this platform should be to:

- Develop suitable test equipment, measurement protocols and QA procedures, in collaboration with standardisation organisations.

- Exchange technical parameters on image quality and dose, performance characteristics, and pathology-specific and patient-specific protocols.

- Consider the establishment of a European Network for those involved in equipment evaluation and type-testing.

- The European Commission and National Authorities should strengthen the role of Medical Physicists in Radiology, and should encourage an increase in their numbers.

- Imaging and data recording systems should contain tools to provide information for Quality Assurance. Manufacturers should be asked to include these in RIS, HIS[2] and PACS.

The Workshop agreed that it was important to follow-up and monitor progress on the extensive list of recommendations above. Thus it was agreed to ask EMAN, as a network focusing on the implementation of ALARA in the medical sector, to undertake this.

Next EAN Workshops

The 14th EAN Workshop, on "ALARA in Existing Exposure Situations", is planned for 4-6 September 2012 in Dublin, Ireland, and the 15th EAN Workshop, on "ALARA Culture", is planned for May 2014 in Croatia. Details of both workshops will be announced on the EAN website.

[1] Radiology Information System (RIS). Picture Archiving and Communication System (PACS)

[2] Hospital Information System (HIS)

Ilona Barth, Arndt Rimpler (BfS, Germany)

Introduction

In nuclear medicine, unsealed beta radiation sources are being used increasingly. They are used for diagnostics with positron emission tomography (PET) as well as for therapy of tumours by radioimmunotherapy (RIT) with labelled antibodies (e.g. 90Y Zevalin®) or by peptide receptor radiotherapy (PRRT) with 177Lu- or 90Y-labelled peptides (e.g. 177Lu- or 90Y-DOTATOC). 90Y-loaded microspheres serve as radiation sources for selective internal radiotherapy (SIRT). Beta emitters are also used for the treatment of inflamed joints by radiosynoviorthesis (RSO). Endovascular brachytherapy (IVBT), using a balloon catheter filled with Re-188 solution, is a promising method for the prophylaxis of restenosis in peripheral blood circulation after percutaneous transluminal angioplasty (PTA) treatments.

In Germany, the number of PET(/CT) examinations has continued to rise (2009: 25 123; +18 % compared to 2008) and the number of radionuclide therapies, in particular non-thyroid treatments, increased since the mid-nineties and stabilized at nearly 50 000 cases per year [1].

Vanhavere et al. [2] indicated that the local skin doses to the hands can surpass the annual dose limit of 500 mSv due to the manipulation of unsealed sources in nuclear medicine. It is known that there is a high dose gradient across the hand, especially for beta radiation. For this reason, accurate routine monitoring of extremity exposure is not easy. Moreover, it is often not performed at all. Thus, there is a lack of knowledge about realistic local skin doses to the hands during nuclear medicine procedures. In addition, the most appropriate position for wearing the routine extremity dosemeter is not known.

In order to solve these problems, numerous measurements were made in Germany in the fields of RSO, IVBT, PRRT and RIT. The most important and comprehensive study was performed within the European research project ORAMED (Optimization of Radiation Protection for Medical Staff) [3]. The main aims were to determine the dose distribution across the hands, to provide guidelines for optimizing the radiation protection standard, to provide reference dose levels for each standard procedure in nuclear medicine and to indicate the most adequate position for the routine monitoring dosemeters. Knowledge of these details is a precondition for fulfilling the ALARA (as low as reasonably achievable) principle in the handling of beta emitters.

Materials and methods

In all studies, thin-layer thermoluminescence dosemeters (TLD) of MCP-Ns type (LiF:Mg,Cu,P) were used. They accurately measure beta as well as photon radiation. For measuring the quantity personal dose equivalent, Hp(0.07), the TLDs were calibrated in 90Sr/90Y standard reference fields. Moreover, the TLDs were tested within an international comparison to ensure an appropriate response of all detectors used within the ORAMED project [4]. The detectors were welded in polyethylene bags and fixed with special tapes or gloves on both hands, palmar and dorsal (Figure 1), allowing the estimation of the maximum local skin dose and the dose distribution across the hands. A common protocol was used for nearly all measurements. In addition to the Hp(0.07) value at each position, the protocol recorded the procedure, radionuclide, total manipulated activity, worker’s dominant hand and experience, radiation protection devices used, and the hospital’s and worker’s ID.

Figure 1. Standard measuring positions (additional: detectors on the nails of index, middle and ring finger)

Results and discussion

Measurements during RSO were performed in 11 doctors’ surgeries, including 13 technicians and 18 doctors. During the study, 210 patients were treated with 90Y. At the beginning of the examinations in RSO, the staff members’ fingertips were found in many cases to be exposed to local skin doses exceeding 100 mSv per treatment day, as a result of direct contact with the unshielded vial, syringe or cannula. Even if the syringe was sufficiently shielded, the tips of the index finger, thumb or middle finger of the non-dominant hand generally incurred the highest exposures. The mean skin dose was about 30 µSv/MBq when holding the cannula during application of about 200 MBq 90Y (Figure 2). When separating the filled syringe from the vial cannula, the personnel, holding the cannula between index finger and thumb, received doses in the range of 50 µSv/MBq. The normalized skin doses to the dominant hand (holding the shielded syringe) were often lower by one order of magnitude.

Figure 2. Example for dose reduction in RSO (figure labels: ≈ 30 µSv/MBq and ≈ 3 µSv/MBq)
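To put the normalized skin doses quoted above into absolute terms, the following minimal sketch multiplies each value by the handled activity of about 200 MBq per application. The function name is illustrative, and treating the lower figure label (about 3 µSv/MBq) as the value after dose reduction is an assumption based on the caption of Figure 2.

```python
def skin_dose_msv(normalized_dose_usv_per_mbq, activity_mbq):
    """Local skin dose in mSv from a normalized dose (µSv/MBq) and an activity (MBq)."""
    return normalized_dose_usv_per_mbq * activity_mbq / 1000.0


ACTIVITY_MBQ = 200  # approximate 90Y activity per application quoted in the text

scenarios = [
    ("holding the cannula during application", 30),
    ("separating the syringe from the vial cannula", 50),
    ("lower value shown with Figure 2 (assumed: after dose reduction)", 3),
]

for label, normalized in scenarios:
    dose = skin_dose_msv(normalized, ACTIVITY_MBQ)
    print(f"{label}: about {dose:.1f} mSv per application")
```

On these figures a single unoptimised application contributes several mSv to the fingertip, which is consistent with treatment-day totals above 100 mSv when many patients are treated.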

At the bottom of a shielded syringe containing 185 MBq 90Y, a dose rate of about 2 mSv/s was measured. Shielding the cannula with a Makrolon ring (Figure 3) and using forceps when syringes were connected to or separated from the cannula led to considerably lower local skin doses [5]. In this case the fingers are protected or are kept at a greater distance from the source, the syringe bottom.

Figure 3. Makrolon ring with inserted canula
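The significance of a dose rate of about 2 mSv/s at the syringe bottom can be shown with a rough calculation of how long continuous contact would take to accumulate the annual skin dose limit of 500 mSv. This is only an order-of-magnitude sketch: it assumes a constant dose rate at one skin location, which real handling does not produce.

```python
ANNUAL_SKIN_DOSE_LIMIT_MSV = 500.0  # annual skin dose limit quoted in the article


def seconds_to_reach(dose_msv, dose_rate_msv_per_s):
    """Contact time in seconds needed to accumulate dose_msv at a constant dose rate."""
    if dose_rate_msv_per_s <= 0:
        raise ValueError("dose rate must be positive")
    return dose_msv / dose_rate_msv_per_s


# Dose rate of about 2 mSv/s measured at the bottom of the shielded syringe.
contact_time_s = seconds_to_reach(ANNUAL_SKIN_DOSE_LIMIT_MSV, 2.0)
print(f"{contact_time_s:.0f} s, i.e. about {contact_time_s / 60:.1f} min of contact")
```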

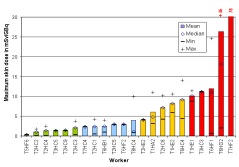

In the ORAMED project, staff members of 16 hospitals were involved for RIT and 3 for PRRT. If more than one measurement per worker was made, the individual mean and median maximum local skin doses were calculated. Figures 4 and 5 show the classification of workers for preparation and administration. The maximum skin doses of both hands were considered. The workers were classified into four groups. Staff in the first and second dose groups can be considered as working under appropriate protection standards. In the fourth group there were a few workers whose practices differed significantly from those of the majority, leading to very high exposures. In one case the annual skin dose limit of 500 mSv was exceeded during a single working day. Dose averages for preparation and administration were calculated without outliers (Figures 4 and 5).

Figure 4. Classification of workers for RIT with Y-90/Zevalin®, preparation

Figure 5. Classification of workers for RIT with Y-90/Zevalin®, administration

The results summarized in Table 1 illustrate that staff receive more than three times the dose during preparation of Zevalin® compared to administration (16.5 mSv versus 4.8 mSv). In PRRT the exposure during preparation is about twice the dose during administration. This is because the working steps during the preparation of radiopharmaceuticals are more difficult and, in most cases, the activity handled is higher. Even though the outliers are not included, the variation between minimum and maximum dose values is large, both between different staff members and for a single person across different sets of measurements.

Table 1. Averaged maximum skin dose per preparation or administration in RIT and PRRT with 90Y, mean activity per preparation or administration: 1.5 and 1.0 GBq in RIT, 10.3 and 5.5 GBq in PRRT, respectively

Procedure: P = preparation, A = administration. The dose columns give the maximum skin dose in mSv.

| Therapy | Procedure | Workers | Mean [mSv] | Median [mSv] | Min [mSv] | Max [mSv] |
|---|---|---|---|---|---|---|
| RIT | P | 15 | 16.5 | 14.2 | 1.8 | 65.9 |
| RIT | A | 19 | 4.8 | 2.9 | 1.0 | 11.9 |
| PRRT | P | 5 | 21.6 | 11.3 | 1.0 | 76.2 |
| PRRT | A | 7 | 10.4 | 8.2 | 2.2 | 26.9 |
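The preparation-to-administration comparison made in the text can be checked directly from the mean values in Table 1. The sketch below is only an arithmetic illustration; the dictionary layout and function name are not part of the original study.

```python
# Mean maximum skin doses in mSv, copied from Table 1.
MEAN_MAX_SKIN_DOSE_MSV = {
    "RIT": {"preparation": 16.5, "administration": 4.8},
    "PRRT": {"preparation": 21.6, "administration": 10.4},
}


def preparation_to_administration_ratio(therapy):
    """Ratio of the mean maximum skin dose in preparation to that in administration."""
    doses = MEAN_MAX_SKIN_DOSE_MSV[therapy]
    return doses["preparation"] / doses["administration"]


for therapy in MEAN_MAX_SKIN_DOSE_MSV:
    ratio = preparation_to_administration_ratio(therapy)
    print(f"{therapy}: preparation dose is about {ratio:.1f} times the administration dose")
```

The ratios come out at roughly 3.4 for RIT and 2.1 for PRRT, in line with "more than three times" and "twice" in the text.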

Moreover, the data were analysed separately for each hand. In Table 2 it is apparent that the non-dominant hand is more exposed than the dominant one.

Table 2. Maximum skin dose on non-dominant and dominant hand of staff in RIT with 90Y-Zevalin

All values are maximum doses in mSv/GBq.

| Procedure | Non-dominant hand: mean | Non-dominant hand: median | Non-dominant hand: range | Dominant hand: mean | Dominant hand: median | Dominant hand: range |
|---|---|---|---|---|---|---|
| Preparation | 8.2 | 8.2 | 0.7 – 41.3 | 6.2 | 1.8 | 0.2 – 63.7 |
| Administration | 4.3 | 2.8 | 0.1 – 24.6 | 2.4 | 1.3 | 0.1 – 14.0 |

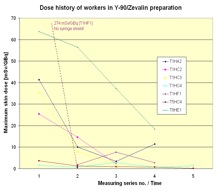

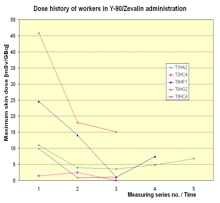

Figure 6 shows the dose history for subsequent measurements. In most cases the exposure decreased from measurement to measurement. This is a result of the optimisation of the radiation protection standard during the studies, achieved by giving staff feedback on the doses they received.

Figure 6. Dose history of workers in RIT with Y-90/Zevalin®

To identify the most appropriate position for wearing a routine partial-body dosemeter, the ratio between the maximum dose on both hands and the dose measured at relevant positions was analysed. The results are shown in Figure 7. As expected, the ratio between the maximum dose and the dose at the index tip of the non-dominant hand was the lowest, because the maximum skin dose was most frequently found on the tip of the index finger of the non-dominant hand, especially when the dose was high. However, the tip of a finger is not suitable for wearing a routine ring dosemeter. The next lowest ratio was found at the palmar index finger base of the non-dominant hand. Therefore, this position is the most appropriate for wearing a ring dosemeter for routine monitoring. Even if the ring dosemeter is worn at this position, the maximum dose is underestimated by a factor of about 6 on average, for preparation and administration taken together.

Figure 7. Ratio of maximum dose on both hands to dose at dosemeter position for all measurements, preparation and administration in therapy together
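The practical consequence of the average underestimation factor of about 6 can be sketched as follows: a ring dosemeter reading is scaled up to an estimated maximum skin dose and compared with the annual limit of 500 mSv. The readings in the example are hypothetical, and applying a single average factor to an individual worker is a simplification, since the measured ratios vary widely.

```python
RING_CORRECTION_FACTOR = 6.0        # average underestimation reported in the article
ANNUAL_SKIN_DOSE_LIMIT_MSV = 500.0  # annual skin dose limit quoted in the article


def estimated_max_skin_dose(ring_reading_msv, factor=RING_CORRECTION_FACTOR):
    """Estimate the maximum skin dose (mSv) from a ring dosemeter reading (mSv)."""
    return ring_reading_msv * factor


def may_exceed_limit(annual_ring_reading_msv):
    """True if the estimated maximum skin dose is above the annual limit."""
    return estimated_max_skin_dose(annual_ring_reading_msv) > ANNUAL_SKIN_DOSE_LIMIT_MSV


# Hypothetical annual ring dosemeter readings in mSv.
for reading in (20.0, 80.0, 100.0):
    estimate = estimated_max_skin_dose(reading)
    flag = "above limit" if may_exceed_limit(reading) else "below limit"
    print(f"ring reading {reading:.0f} mSv -> estimated maximum {estimate:.0f} mSv ({flag})")
```

With this factor, an annual ring reading above roughly 83 mSv already corresponds to an estimated maximum skin dose above the limit.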

Conclusions

The very wide range of exposures found during the workplace studies reflects the variety of procedures as well as differences in working behaviour with regard to radiation protection. There is a high potential to decrease the exposure of the hands by very simple means. In response to feedback from the measurement results, nearly all workers changed some working steps to avoid any direct contact with the source. Besides the use of shielding, the use of forceps for connecting or separating the syringes to/from needles or tubes was very successful (Figure 6). Often the workers were not aware that the dose rate to the skin from high-energy beta emitters is higher by two orders of magnitude compared to a photon emitter like 99mTc [6]. In many cases, a lack of individual awareness of high skin exposures, resulting from absent, inadequate or inaccurately placed extremity dosemeters in routine monitoring, led to a low radiation protection standard. Awareness of the need to improve the radiation protection standard can be achieved by adequate skin dose monitoring. The ring dosemeter should be worn on the base of the index finger of the non-dominant hand with the detector in the palmar direction. Even then, the maximum dose to the hands is underestimated by a factor of about 6 at this position. Staff normally carry out diagnostic as well as therapy procedures. For this reason it is significant that the ORAMED studies of diagnostic procedures with 18F- and 99mTc-labelled radiopharmaceuticals identified the same appropriate position for the ring dosemeter and the same correction factor [7].

Acknowledgement

Part of the research leading to these results has received funding from the European Atomic Energy Community's Seventh Framework Programme (FP7/2007-2011) under grant agreement n° 211361.

Thanks to all members of medical staff and hospitals supporting the research.

References

[1] Hellwig D., Grgic A., Kotzerke J., Kirsch C.-M., 2011, Nuklearmedizin in Deutschland. Nuklearmedizin 50: 53-67

[2] Vanhavere F., Carinou E., Donadille L., Ginjaume M., Jankowski J., Rimpler A., Sans Merce M., 2008, An overview of extremity dosimetry in medical applications. Radiation Protection Dosimetry 129 (1-3): 350-355

[4] Ginjaume M., Carinou E., Donadille L. et al., 2008, Extremity ring dosimetry intercomparison in reference and workplace fields. Radiation Protection Dosimetry DOI:10.1093/rpd/nc000

[5] Rimpler A., Barth I., 2005, Beta-Strahler in der Nuklearmedizin – Strahlengefährdung und Strahlenschutz. Der Nuklearmediziner 28: 1-10

[6] Radionuclide and Radiation Protection Data Handbook, 2002, Radiation Protection Dosimetry 98, No. 1

[7] Carnicer A., Ferrari P., Baechler S. et al., Extremity exposure in diagnostic nuclear medicine with 18F- and 99mTc-labelled radiopharmaceuticals – Results of the ORAMED project. Radiation Measurements, in print

Anders Widmark (1, 2), Eva Godske Friberg (1)

(1) NRPA, Norway

(2) Gjoevik University College, Norway

Note: This article has already been published in Radiation Protection Dosimetry. Oxford University Press Rightlinks licence 2756401364488

Introduction

The risk of deterministic effects is a potential problem in interventional radiology, especially when the procedures are performed outside a Radiology department [1]. Cardiology departments often perform advanced interventional procedures, but competence in, and appropriate attitudes towards, radiation protection are sometimes lacking [2]. The International Atomic Energy Agency has recently highlighted the importance of radiation protection and competence in interventional cardiology, and has arranged several courses and produced training material for radiation protection in cardiology [3]. The Norwegian Radiation Protection Authority (NRPA) was contacted by a Cardiology department with a request for assistance with dose measurements. The department performed bi-ventricular pacemaker (BVP) implants, which is a technically complicated treatment for patients with severe heart insufficiency. The department had recognized a suspicious radiation burn on a patient, three weeks after a BVP procedure. The patient had undergone two BVP implants and the lesion was the size of a palm. The lesion was situated on the patient's back and was recognized as radiation dermatitis. The aim of this work was to illustrate that the patient's skin dose is highly dependent on the equipment used and the operator's working technique, and that skills in radiation protection can significantly reduce the skin dose.

Material and method

To assist the Cardiology department with skin dose measurements, the NRPA prepared sets of thermoluminescence dosemeters (TLDs) (LiF:Mg,Ti, Harshaw TLD-100 chips; Harshaw/Bicron, Solon, Ohio, USA), each containing 10 TLDs. The TLDs in each set were arranged in a star pattern to cover a large area of the patient's back. The TLD arrays were sent to the Cardiology department by post, with instructions to place them on the patient's back where the peak dose was most likely to occur. Background radiation was corrected for using control TLDs that accompanied the postal shipment. Dose measurements were performed on eight consecutive patients and the TLDs were afterwards read at the NRPA laboratory. After the eight initial dose measurements, a site audit was performed at the Cardiology department. The characteristics of the equipment were recorded, and the working technique and general skills in radiation protection during a BVP procedure were observed. Based on the findings of the audit, a short meeting was held with the participating staff, where the working technique was discussed. After the audit, new sets of TLDs were distributed and dose measurements were performed on six new patients.

Results

The X-ray equipment was a Siemens Multiscope (1989) with a 40 cm diameter image intensifier (II). The equipment was intended for abdominal angiography and was considered unsuitable for coronary procedures, due to the large II, poor dose reduction options and lack of a dose monitoring device. The large II also made it difficult to use an optimally short II-to-skin distance, since the II came into conflict with the patient's head. During the procedure, magnification with a 28 cm diameter II entrance field had to be used to obtain sufficient image quality. The equipment did not have any options for pulsed fluoroscopy or last-image hold. There was a possibility for extra filtering of the X-ray beam, but this option was not used. The dose rate was not adjusted by the cardiologists to the actual image quality needs during the different steps of the procedure, resulting in a high dose rate throughout the procedure. The audit also gave the impression that fluoroscopy was over-used. Image acquisitions were started at the same time as the contrast injector. This results in unnecessary radiation, because images are wasted during the time it takes for the contrast medium to reach the heart. Altogether, the working technique was far from optimized, with no focus on radiation protection.

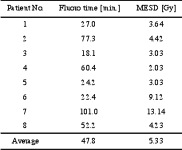

The average maximum entrance surface dose (MESD) for the first eight patients was 5.3 ± 3.8 Gy, ranging from 2.03 to 13.14 Gy. The fluoroscopy time varied from 18.1 to 101 minutes, with an average of 47.8 ± 30.2 min (Table 1).

Table 1. Fluoroscopy time and maximum entrance surface dose (MESD) for the initial eight patients

During the meeting, directly after the audit procedure, the following “Do’s” and “Don’ts” were given as a first attempt to reduce the doses:

- Don’t over-use the fluoroscopy.

- Do adjust the image quality to the actual needs during the different steps in the procedure.

- Don’t start the image acquisition before the contrast medium has reached the heart.

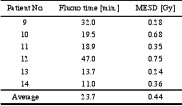

The TLD measurements on the six consecutive patients following the educational meeting showed a significant skin dose reduction, with an average MESD of 0.44 ± 0.2 Gy, ranging from 0.24 to 0.75 Gy, which is less than 10 % of the previous average. The average fluoroscopy time also decreased, from 47.8 to 23.7 ± 13.5 min, which is a 50 % reduction (Table 2). All six of these patients were below the threshold for deterministic effects [4][5].
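The percentages quoted above can be checked with a few lines of arithmetic. The sketch below uses only the average values given in the text; the variable and function names are chosen here for illustration.

```python
# Average values quoted in the text, before and after the educational meeting.
mesd_before_gy = 5.3
mesd_after_gy = 0.44
fluoro_before_min = 47.8
fluoro_after_min = 23.7


def percent_reduction(before, after):
    """Relative reduction from `before` to `after`, in percent."""
    return 100.0 * (before - after) / before


mesd_ratio_pct = 100.0 * mesd_after_gy / mesd_before_gy
mesd_reduction_pct = percent_reduction(mesd_before_gy, mesd_after_gy)
fluoro_reduction_pct = percent_reduction(fluoro_before_min, fluoro_after_min)

print(f"MESD after / before: {mesd_ratio_pct:.1f} %")
print(f"MESD reduction: {mesd_reduction_pct:.1f} %")
print(f"Fluoroscopy time reduction: {fluoro_reduction_pct:.1f} %")
```

The skin dose fell by a much larger factor than the fluoroscopy time, which indicates that shorter fluoroscopy alone cannot account for the whole reduction.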

Table 2. Fluoroscopy time and maximum entrance surface dose (MESD) for the six patients following the educational meeting.

Discussion and conclusion

The patient doses during BVP procedures can easily reach thresholds for deterministic effects. The BVP procedures in this department were performed on suboptimal equipment, with poor dose reduction options and no dose monitoring device. The use of this equipment underlines the lack of competence in radiation protection and of understanding of optimal use of the equipment.

The average maximum skin dose of 5.3 Gy found in this case is, not surprisingly, much higher than those reported by others. Taylor and Selzman reported values of 1.6 ± 1.8 Gy and 36.4 ± 16.5 min for average MESD and fluoroscopy time, respectively, during BVP [6]. The reported fluoroscopy time is similar to that found for the eight initial patients, indicating that the excess dose is due to a higher use of cine together with a short source-to-skin distance, high dose rate and use of magnification. The maximum skin doses measured in this study are also likely to be underestimated, since dose measurements using TLD arrays often fail to capture the hot spots created by overlapping radiation fields. Another factor influencing the accuracy of the measured MESD is the subjective placement of the TLDs by the operators, with no control by the NRPA. Interventional procedures are also dynamic in nature and can vary from patient to patient for the same type of procedure. To increase the accuracy of the dose measurements, radiochromic film should have been used, but in this case the goal was to assist the cardiology department with simple measurements to identify the dose level.

The initial eight measured patient doses were all above the threshold for deterministic effects. The threshold for an early transient erythema is considered to be 2 Gy, and the patient with the highest dose, 13.1 Gy, was above the threshold for severe effects such as dermal atrophy and telangiectasia [4][5]. When the cardiologists realised that they were delivering doses capable of causing deterministic effects in their patients, they became more aware of radiation protection and interested in learning a more appropriate use of the equipment.

After the audit and the educational meeting, where the three “Do’s” and “Don’ts” were given, the average MESD for the six monitored patients was 0.44 ± 0.2 Gy. This is below the values reported by Taylor and Selzman and also far below the threshold for deterministic effects. The 50 % decrease in fluoroscopy time made a significant contribution to the decrease in skin dose. Other significant factors were starting the image acquisition only when the contrast medium reaches the heart and adjusting the image quality to the actual needs during the different steps of the BVP procedure. The enormous dose reduction achieved by proper use of the equipment also motivated a change in attitudes towards radiation protection of the patients. To fully optimize the procedure with respect to patient doses, much more effort has to be put into the education of the operators, and equipment dedicated to coronary procedures has to be used.

Further optimization on this particular equipment should comprise a reduction of the acquisition frame rate and use of the optional extra filtering to reduce the skin doses [7][8]. Use of extra filtration has to be balanced against the decrease in image contrast. The conditions revealed in this case were not in compliance with the Norwegian radiation protection regulation, which requires that interventional procedures shall be performed on dedicated equipment and by skilled operators. Based on this, the department was required to take corrective actions, e.g. to change the equipment or use another interventional suite, to implement an educational programme for all involved staff and to develop procedures for follow-up of patients with skin doses above 2 Gy.

Despite the suboptimal equipment, it was possible to decrease the patient doses significantly, which shows that competence is a key factor in radiation protection.

Education and training are also proposed as a success factor for radiation protection by the International Atomic Energy Agency, the International Commission on Radiological Protection, and Wagner and Archer [9].

Later inspections in the department showed a significant improvement in skills and attitudes towards radiation protection. New dedicated equipment had been purchased, with dose reduction techniques and a dose monitoring device. There was also a more serious focus on education and training, and the department had procedures for follow-up of patients receiving skin doses above 2 Gy.

This case has shown that a few very basic pieces of advice on operating technique can give significant results in dose reduction, especially if the user has no previous competence in radiation protection.

References

[1] Food and Drug Administration. Avoidance of serious X-ray induced skin injuries to patients during fluoroscopically guided procedures. Important information for physicians and other health care professionals. FDA, September 9, 1994.

[2] Friberg EG, Widmark A, Solberg M, Wøhni T, Saxebøl G. Not able to distinguish between X-ray tube and image intensifier: fact or fiction? Skills in radiation protection with focus outside radiological departments. Proceedings from 4th International Conference on Education and Training in Radiological Protection. Lisboa, Portugal 8-12 November 2009. ETRAP 2009.

[3] International Atomic Energy Agency, IAEA. Regional training courses on radiation protection in cardiology organized under TC support. Vienna: IAEA, 2009. http://rpop.iaea.org/RPOP/RPoP/Content/AdditionalResources/Training/2_TrainingEvents/Cardiology.htm (15.11.2010)

[4] Wagner LK, Eifel PJ, Geise RA. Potential biological effects following high X-ray dose interventional procedures. Journal of Vascular and Interventional Radiology 1994; 5:71-84.

[5] ICRP Publication 85. Avoidance of radiation injuries from medical interventional procedures. Annals of the ICRP. International Commission on Radiological Protection, 2000.

[6] Taylor BJ. and Selzman KA. An evaluation of fluoroscopic times and peak skin doses during radiofrequency catheter ablation and biventricular internal cardioverter defibrillator implant procedures. Health Phys. 96(2):138-43; 2009.

[7] Nicholson R, Tuffee F, Uthappa MC. Skin sparing in Interventional Radiology: The effect of copper filtration. Br. J Radiol 2000: 73:36-42.

[8] Gagne RM, Quinn PW. X-ray spectral considerations in fluoroscopy. In: Balter S and Shope TB (eds.): Physical and Technical Aspects of Angiography and Interventional Radiology: Syllabus for a Categorical Course in Physics. Oak Brook, IL. Radiological Society of North America, 1995, 49-58.

[9] Wagner LK and Archer BR. Minimizing risks from fluoroscopic X rays. 4th ed. Partners in Radiation Management, LTD. Company; 2004.

Steve Ebdon-Jackson (HPA, UK)

Background

Nuclear Medicine imaging relies on the tracer principle first established in 1913 by Georg de Hevesy. It is used to demonstrate physiological processes and involves the administration of a small amount of a radioactive material to the patient, which then distributes within the body and accumulates in particular areas or organs. The distribution depends upon the particular material administered, which is chosen depending on the organ of interest. The radiation (usually gamma rays) emitted by the radionuclide is detected and an image of the distribution of the radioactivity within the body is constructed.

A key challenge for nuclear medicine is to detect enough gamma rays to acquire an image that contains sufficient information for accurate diagnosis, while keeping the radiation dose as low as reasonably practicable. This might be achieved by reducing the administered activity but imaging for a longer time. For procedures where the distribution is fixed, this is possible within the limits of the patient’s ability to lie completely still; typically, imaging times exceeding 30 minutes are prone to patient motion. It is not possible for some procedures where the distribution within the organ may be changing during imaging.
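The trade-off between administered activity and imaging time can be illustrated with a simple proportionality. The sketch below assumes a fixed tracer distribution and that detected counts scale linearly with both activity and acquisition time, neglecting radioactive decay during the scan, biological clearance and detector dead time. The function names and the 20-minute reference scan are hypothetical.

```python
def relative_counts(activity_fraction, time_fraction):
    """Detected counts relative to a reference protocol.

    Assumption: counts are proportional to activity x acquisition time.
    """
    return activity_fraction * time_fraction


def time_factor_for_same_counts(activity_fraction):
    """Factor by which the scan must be lengthened to keep counts unchanged."""
    return 1.0 / activity_fraction


reference_scan_min = 20.0  # hypothetical reference acquisition time
for fraction in (1.0, 0.75, 0.5):
    scan_min = reference_scan_min * time_factor_for_same_counts(fraction)
    flag = "(exceeds 30 min, motion-prone)" if scan_min > 30.0 else ""
    print(f"activity x{fraction:.2f} -> {scan_min:.1f} min {flag}")
```

Under these assumptions, halving the activity doubles the required scan time, which for the hypothetical 20-minute protocol already exceeds the 30-minute limit mentioned above.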

The primary tool for nuclear medicine imaging is the gamma camera. The gamma rays emitted by the radionuclide are detected by a crystal and an image of the distribution of the radioactivity is built up. Because the gamma rays are emitted from the patient in all directions a collimator is used to acquire an accurate image of their distribution. Gamma rays which are not coming orthogonally from the patient are absorbed by the collimator and eliminated from the final image.

A collimator with small holes will provide better resolution but has lower sensitivity, as it absorbs more of the emitted gamma rays. A collimator with larger holes is more sensitive (as it allows more gamma rays to be detected) but has poorer resolution. In nuclear medicine there is always a trade-off between resolution and sensitivity. In practice the collimator choice depends upon the organ being imaged and the type of imaging.

Single Photon Emission Computed Tomography Imaging

As with other imaging modalities, it is possible to produce 2-dimensional planar images or to use planar images acquired from a range of angles to reconstruct a full 3-dimensional distribution. In conventional nuclear medicine this is known as Single Photon Emission Computed Tomography (SPECT) imaging.

All SPECT reconstruction techniques have limitations. There are issues with:

- Attenuation (gamma rays lost due to absorption in the patient)

- Scatter (gamma rays are scattered within the patient before detection)

- Resolution (becomes poorer with increasing distance from patient to camera)

- Noise (becomes higher with reduced counts)

- Computation time (significant for accurate methodology)

In practice, three reconstruction methods have been used:

- Filtered back projection has been the standard approach for many years. It is fast but amplifies noise, and attenuation and scatter corrections produce errors.

- 2-D iterative reconstruction techniques have become available with greater computer power, and attenuation and scatter correction have become possible. These techniques reconstruct each slice separately and are slower than filtered back projection, but they deal with noise effectively.

- 3-D iterative reconstruction is now available where all slices are reconstructed together. This is even slower than the 2-D approach but has the advantage that an additional correction can be made for the variation of resolution with depth within the patient - resolution recovery.
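The idea behind iterative reconstruction can be sketched with a toy example. The text does not say which algorithm any vendor uses; the sketch below uses the maximum-likelihood expectation-maximisation (MLEM) update purely as a well-known illustration, with a hypothetical 3-pixel, 4-measurement system matrix and noiseless data. In a real system the matrix would model the acquisition geometry and could also include attenuation, scatter and depth-dependent blurring.

```python
def forward(A, x):
    """Project image x (list of pixel values) through system matrix A."""
    return [sum(a * v for a, v in zip(row, x)) for row in A]


def back(A, r):
    """Back-project detector-space values r into image space (A transposed times r)."""
    n_pix = len(A[0])
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(n_pix)]


def mlem(A, y, n_iter=200):
    """MLEM: repeatedly scale the image by the back-projected ratio of
    measured to estimated projections."""
    x = [1.0] * len(A[0])               # uniform, strictly positive start
    sens = back(A, [1.0] * len(A))      # sensitivity image (column sums of A)
    for _ in range(n_iter):
        proj = forward(A, x)
        ratio = [m / p if p > 0 else 0.0 for m, p in zip(y, proj)]
        corr = back(A, ratio)
        x = [v * c / s for v, c, s in zip(x, corr, sens)]
    return x


# Hypothetical toy system, for illustration only.
A = [
    [1.0, 0.5, 0.0],
    [0.0, 1.0, 0.5],
    [0.5, 0.0, 1.0],
    [1.0, 1.0, 1.0],
]
true_image = [4.0, 1.0, 2.0]
y = forward(A, true_image)              # noiseless "measured" projections
estimate = mlem(A, y)
print([round(v, 3) for v in estimate])
```

Each iteration requires a forward and a back projection, which is why iterative methods are slower than filtered back projection; the update keeps pixel values non-negative by construction.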

Resolution Recovery – Implications for ALARA

All manufacturers now offer resolution recovery software packages. These are gamma camera, collimator and procedure specific. Generic products are also available. Each would need to be validated against conventional techniques. If expected performance is verified, resolution recovery software should be able to change the current balance between image quality, administered activity and scan time.

In most cases these products were developed and marketed with the intention that image quality would be maintained or improved, administered activities would remain unchanged and scan times would be reduced, thus improving the efficiency and cost effectiveness of the nuclear medicine service. These products may, however, offer the potential to maintain image quality and scan times while reducing the administered activity to the patient. This has a positive impact on patient dose but coincidentally may also help nuclear medicine services use available 99mTc more effectively, ensuring that costs are reduced and procedures are undertaken as required.

A number of concerns and unknowns exist about the routine use of resolution recovery software. Published patient studies have concentrated on the “unchanged activity/decreased imaging time” approach, and moving towards the “decreased activity/routine imaging time” paradigm will require national and local validation.

Pilot Study – Use of Resolution Recovery in Myocardial Perfusion Imaging

To go some way towards addressing these issues, the Administration of Radioactive Substances Advisory Committee (ARSAC), a statutory advisory committee, set up a sub-group in collaboration with the Institute of Physics and Engineering in Medicine (IPEM) Nuclear Medicine Special Interest Group (NMSIG) and Software Validation Working Party. The aims of this group were:

- To establish what products are available, how they work, what is required in order to use them and what costs are associated with each product

- To evaluate at least one of the available products, as a pilot study, to establish whether RR can maintain or improve image quality, and hence image interpretation, and compensate for a reduction in administered activity, when compared to conventional imaging protocols

- To develop a wider study protocol for use in validation of a full range of products.

The pilot study considered myocardial perfusion imaging (MPI), the second most common nuclear medicine procedure in the UK. It is a high dose procedure which offers the potential for significant dose reduction. The diagnostic reference level (DRL) for MPI with 99mTc is 1600 MBq (for patients who have both stress and rest components of the study). The principal objectives of the pilot study were:

- To determine whether the interpretation of images obtained with half the normal administered activity and processed with resolution recovery software can be the same as the interpretation from that obtained with normal activity and processed in the standard way

- To determine whether objective quantitative parameters calculated from gated images obtained with half the normal administered activity and processed with resolution recovery software are the same as those obtained with normal activity and processed in the standard way.

The pilot study was carried out using GE Evolution for Cardiac resolution recovery software in the Central Manchester Nuclear Medicine Centre.

Results

The study involved rest and stress data from 44 patients. Each patient was administered the routine activity (1600 MBq in total) and gated images were acquired. The data were collected, stored and processed so that full-count data could be compared with half-count data with resolution recovery applied, i.e. a study using half the administered activity was simulated.
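The text does not describe how the half-count data were derived from the full-count acquisitions. One generic way to simulate a reduced-activity study, shown here only as an assumption-laden sketch, is binomial thinning: each detected event is kept independently with probability 0.5. Thinning Poisson-distributed counts in this way yields counts that are again Poisson-distributed with half the mean. The function name and the pixel counts are hypothetical.

```python
import random


def thin_counts(full_counts, keep_fraction=0.5, seed=0):
    """Simulate a reduced-count acquisition by keeping each detected event
    independently with probability `keep_fraction` (binomial thinning)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    thinned = []
    for n in full_counts:
        kept = sum(1 for _ in range(n) if rng.random() < keep_fraction)
        thinned.append(kept)
    return thinned


# Hypothetical counts per pixel for illustration only.
full = [120, 95, 210, 60, 180]
half = thin_counts(full)
print("full-count total:", sum(full))
print("half-count total:", sum(half))
```

Other approaches, such as using only part of the acquisition time, would serve the same purpose; which one is appropriate depends on how the data were stored.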

Double reporting showed a clinically significant difference between reports in only 2 of the 44 cases, and of these only one resulted in different patient management. Quantitative left ventricular function analysis showed no significant difference in the LVEF values calculated from the full-count and half-count data at both stress and rest. Further details of the pilot study are included in a report by the ARSAC on the impact of 99Mo shortages on nuclear medicine services, published in November 2010 (www.arsac.org.uk).

Summary and Conclusions

Resolution recovery software was developed to reduce imaging time in busy nuclear medicine departments. Taking an ALARA perspective, this software may be used instead to reduce administered activity and hence patient dose while keeping scan times the same.

Initial results are promising and, in MPI, show that this approach produces images of acceptable quality from half the administered activity. Further work will be required to validate this at a local level, for a range of procedures, equipment and software combinations.

Dr. Birgit Keller (Federal Ministry for the Environment, Nature Conservation and Nuclear Safety, Germany)

As in other industrialised countries, many people in Germany are increasingly interested in the early detection of diseases. There is a general growing awareness of health issues in the population and, as a consequence, the urge to lead a healthier lifestyle. Preventive measures which are intended to prevent the outbreak of diseases are the top priority of health protection and should be supported.

In addition to purely preventive measures, many people want diseases to be detected as early as possible so that they can be cured. In many cases early detection requires an examination which makes use of ionising radiation.

So, how do we deal with this trend among patients? Is their desire for early detection (or their fear of becoming ill) sufficient to justify the use of ionising radiation for the purpose of early detection? Or do we need a stringent legal framework? How do we deal with self-referral and self-presentation of asymptomatic individuals? What role do doctors play here? In the following, I would like to discuss these issues in some more detail.

Legal situation in Germany

In Germany, the requirements for using radioactive substances or ionising radiation on human beings for medical purposes are laid down in the Radiation Protection Ordinance and the X-ray Ordinance. Only a radiological practitioner with adequate training (technical competence) in radiation protection is authorised to perform X-ray examinations or treatments. In addition, any such application must serve a medical purpose, i.e. there must be reasonable suspicion of disease. The radiological practitioner must furthermore carry out an individual justification, i.e. he has to review whether the health benefits for the use of X-rays outweigh the radiological risk, and whether there are other options with comparable health benefits with no or lesser radiation exposure.

Besides these regulations, which refer to healthcare, there are certain prerequisites for the use of ionising radiation for screening purposes. This kind of application is admissible only within approved health screening programmes. In Germany, these screening programmes need to be approved by health authorities in the respective states. This general approval of a screening programme takes the place of the justification for the individual application of X-rays by the radiological practitioner. Currently, there is only one approved health screening programme: the breast cancer screening programme using X-ray mammography for women aged between 50 and 69.

Criteria for the approval of health screening programmes

Which criteria must be met for the approval of health screening programmes using X-rays?

- The examination should have a sufficiently high positive predictive value as well as a sufficiently high negative predictive value.

- The examination is acceptable for the patient (exposure, costs).

- There is no other procedure for examination available with a lower risk than that of ionising radiation.

In addition, the following prerequisites relevant for effective secondary preventive measures for early detection of diseases should be met:

- The individual risk profile is known or can be precisely defined.

- The severity of the suspected disease justifies an early detection measure.

- The disease to be detected in an asymptomatic stage must have a sufficiently high prevalence to ensure the effectiveness of the examination.

- The disease must be at a stage in which it does not yet show symptoms but can be detected.

- Effective therapies, which improve the prognosis when applied at an early stage and/or the quality of life of the patient, exist in principle for this disease and are available within the health care system.
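The link between predictive value and prevalence in the criteria above can be made concrete with Bayes' rule. In the sketch below the sensitivity (0.90) and specificity (0.95) are hypothetical values chosen for illustration; they do not describe any particular examination.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a test applied to a population with the
    given disease prevalence, using Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    true_neg = specificity * (1.0 - prevalence)
    false_neg = (1.0 - sensitivity) * prevalence
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Hypothetical test characteristics, evaluated at three prevalences.
for prevalence in (0.001, 0.01, 0.1):
    ppv, npv = predictive_values(0.90, 0.95, prevalence)
    print(f"prevalence {prevalence:.3f}: PPV {ppv:.3f}, NPV {npv:.4f}")
```

For the same test, the positive predictive value falls sharply as the prevalence decreases, so most positive findings in a low-prevalence group are false positives. This is why a sufficiently high prevalence in the examined group is listed as a prerequisite.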

Since a health screening programme uses ionising radiation on asymptomatic persons, the requirements for technological quality assurance and the quality of the assessment are particularly stringent. This procedure therefore requires a consistent quality assurance regarding:

- Advice and clarification for interested persons,

- Distinguishing between persons suitable for an examination and those who do not benefit from it,

- Standards for equipment,

- Examination,

- Assessment of the examination including validation,

- Recommendations for further diagnostic measures to confirm and classify diagnosis (clarification) and for suitable treatment where necessary,

- Documentation and evaluation.

In our opinion, health screening programmes can only be considered as appropriate if these criteria are fulfilled.

European regulations

The provisions stipulated by German law are in accordance with the current Medical Exposure Directive. Individual early detection, however, is also an issue in the proposal for the European Basic Safety Standards Directive, which says: "Any medical radiological procedure on an asymptomatic individual, intended to be performed for early detection of disease shall be part of a health screening programme or shall require specific documented justification for that individual by the practitioner, in consultation with the referrer, following guidelines from relevant professional bodies and competent authorities."

In contrast to earlier regulations, this directive allows more scope for early detection on asymptomatic persons, albeit following guidelines from relevant professional bodies and competent authorities, and in consultation with the referrer.

Individual health assessment

Between an approved screening programme and the individual application of X-rays within healthcare, individual health assessment takes place in a legal grey area – sometimes even referred to as wellness screening. Current German law does not allow for such early detection measures. There is major concern that the radiation risks or undesired consequences of ill-considered early detection measures are not taken sufficiently into account. This is even more true if these early detection measures are chosen by the persons themselves.

Individual health assessment occurs particularly often with regard to mammography screening. Why, for example, is the breast cancer screening programme rejected so often? Reasons include that many women do not feel comfortable with a uniform, perhaps even impersonal, procedure, or that physicians reject the programme out of their own interest.

At this point I would like to stress that, in this context, we do not reject early detection measures on an individual basis for women with a family history of the disease or with other severe risk factors. In this case, suspicion of disease can be considered high enough to justify the application of X-rays within healthcare.

Patients choosing their own treatment

We think that a justification for the use of ionising radiation for the early detection of a disease cannot be issued solely on the wish of the individual person, as the individual person usually lacks the expertise to balance the benefits and disadvantages of an examination. An indication may only be established according to the latest medical knowledge, in line with agreed recommendations and guidelines of scientific expert bodies, and following a review of all relevant factors.

Medically untrained persons often wrongly assess their personal risk profile as well as the benefits and disadvantages of an early diagnosis and/or treatment of a disease. Therefore, before individual early detection measures are taken, the patient should get comprehensive advice based on agreed guidelines regarding the individual risk of disease. This should not only include a detailed description of the respective examination but also any possible benefits and disadvantages of positive or negative findings.

These issues, which also play a significant role for organised mass examinations, are of particular importance here.

Potential advantages include:

- Improved probability of cure or survival of patients through treatment at an early stage,

- Improved quality of life for patients through an early-stage treatment that imposes less strain,

- Reassurance in the case of (correct) negative findings and improved quality of life (exclusion diagnosis).

Potential disadvantages include:

- Overdiagnosis, i.e. early detection of a disease that will not cause medical problems during the patient's lifetime,

- Reducing quality of life if a diagnosis or treatment is simply brought forward without offering a better prognosis or quality of life,

- Incorrect positive findings causing unnecessary anxiety and unnecessary further diagnostic measures and treatments, including their side effects and complications,

- Incorrect negative findings providing false reassurance and potential delay of new diagnosis if symptoms occur,

- Potential harm to health through radiation exposure.

In general, patients should be informed not only about the respective examination, but also about the follow-up measures which might be the result of uncertain or positive findings.

It is necessary to provide interested persons with scientifically founded information on early detection measures.

The aim should be to enable patients to assess the benefits and disadvantages of early detection examinations with ionising radiation. They should also be aware of the examination procedure and be advised that the findings may lead to further measures. This information is necessary so that the patient can not only decide for or against a certain examination, but is also aware of the range of measures that may follow.

Under which circumstances could individual early detection be permissible? (The following comments are based on a position paper by the Commission on Radiological Protection, an advisory body on radiation protection issues for the German government.)

Ultimately, we must ask to what extent the wishes of patients should be taken into account. Who should take the decision concerning the use of ionising radiation on patients?

This problem is currently being discussed in Germany. There is agreement that a patient's wishes cannot be the decisive factor here. At the same time, the notion is growing stronger that a patient's opinion should be given more consideration, provided this is embedded in a general and individual justification.

From the radiation protection point of view, examinations of asymptomatic persons within the framework of individual early detection should only be possible for exactly defined uses. Lists could be drawn up in cooperation with medical expert bodies. The following uses might be options:

- CT or MR colonography (virtual colonoscopy),

- Low dose CT of lung for smokers,

- X-ray mammography for women outside approved screening programmes.

In addition, the justification of an individual health assessment has to be based on:

- The medical history of the person and, if necessary, physical examination,

- Drawing up an individual risk profile,

- Comprehensive information and advice on benefits, risks and undesired side effects as well as diagnostics for clarification where necessary,

- Severity and course of suspected disease, options for valid diagnostics and treatment,

- Highest quality standards regarding implementation, findings and decision on further procedures,

- Comprehensive documentation of measures,

- Accompanying evaluation of examination.

To sum up:

An individual health assessment using X-rays for the early detection of severe diseases should be carried out exclusively on the basis of agreed guidelines of scientific expert bodies, which take into account the above-mentioned criteria.

Anne Catrine Traegde Martinsen, Hilde Kjernlie Saether (The Interventional Centre, Oslo University Hospital, Norway)

Introduction

Over the last 30 years, the technological developments in radiology and nuclear medicine have been tremendous, and therefore technological competence in the hospitals is demanded more than ever. In future, the need for technologists in hospitals will further increase, since the technological development continues and the use of high tech advanced equipment is increasing rapidly, including the need for advanced hybrid surgical theatres where advanced radiological equipment is used during operations.

Physicists are necessary to ensure the quality of equipment, optimize examinations with respect to radiation dose and image quality, and to develop new methods and implement new techniques. Diagnostic physicists must collaborate closely with radiologists and radiographers and other users of the equipment to ensure good diagnostic quality of the examinations. This multi-disciplinary collaboration, combined with the implementation of advanced technology in clinical practice, is making the work as a medical physicist especially challenging.

Regional physicist service in the South Eastern part of Norway

In 2005, Oslo University Hospital (OUH) established a group of physicists specialized in diagnostic radiology, nuclear medicine and intervention, serving most of the hospitals in the south-eastern part of Norway. Today we provide a service to 35 radiological and nuclear medicine departments outside the OUH. This is a non-profit service; the physicists' salaries and the travelling costs related to the work done in a hospital are paid for by the receiving hospital. As far as possible, each hospital has one contact physicist working together with the radiologists and technicians in the radiology department, and multidisciplinary teamwork is an important success factor. The services offered are:

- System acceptance tests

- Image quality and dose

- Quality assurance tests annually

- Multidisciplinary dose and image quality optimizing projects in CT, trauma, neuroradiology, intervention and pediatrics

- Lectures for surgical personnel using X-ray equipment

- Lectures at the radiological and nuclear medicine departments

- Dose measurements and dose estimates

- Consultancy in purchases of new radiology modalities

The economic benefits of a Regional Physicist Centre are that fewer personnel are needed, because lectures, reports and knowledge are reused among the physicists in the department. Less measuring equipment, fewer phantoms, etc. are also needed in the region, thanks to a centralised pool of equipment.

Other benefits in the region are the enhanced competence in CT, X-ray, MR and nuclear medicine resulting from the exchange of experience and knowledge between different laboratories and hospitals. Technological problems are solved using experience from similar earlier problems at other sites, and the development of QA methods and procedures is consolidated in the group of physicists.

Quality Assurance

Physicists have the responsibility for quality control of radiological and nuclear medicine equipment, monitoring of radiation protection in the hospital, and teaching and radiation protection training for surgical personnel who use C-arms during operations.

Each year we perform QA on more than 400 X-ray and nuclear medicine machines. Annual inspections of the equipment are often carried out in cooperation with the radiographers responsible for the modality, and in dialogue with the technical department regarding issues that need follow-up or service. Small errors are often identified during these inspections, which makes it possible to correct them and avoid potentially larger problems with the equipment. Sometimes even serious errors that require adjustments can be repaired immediately.

The results from these inspections give us knowledge about baselines and reference values for different types of equipment for all vendors in the Norwegian market. This knowledge has been useful in several follow-up cases between hospitals and the vendors, and has even led to development of new reconstruction algorithms and improved automatic dose modulation for one CT vendor.

In deciding on the replacement of radiological and nuclear medicine equipment, the diagnostic physicist is a resource in evaluating performance and diagnostic quality compared to the corresponding modern equipment. Physicists have an overview of performance over time through annual inspections, and evaluate quality in relation to the international radiation protection guidelines and other international recommendations.

When purchasing new radiological equipment, physicists are involved together with radiologists, radiographers, technical personnel and procurement personnel. This is expensive and sophisticated equipment, and it therefore demands an orderly and well thought out process in which all options are carefully considered and discussed. This type of work demands multidisciplinary collaboration and the ability to discuss across professions.

Optimisation of examinations with respect to radiation dose and image quality

In 2008 CT examinations accounted for 80% of the total population radiation exposure from medicine in Norway [1]. Therefore, optimisation of the CT examinations with respect to radiation dose and image quality is necessary. Further development of new imaging techniques to improve image quality while reducing the radiation dose to patients is required. To succeed in such processes, multidisciplinary collaboration between radiologists, radiographers and physicists is essential.

Through the regional services we have achieved high competence in CT, X-ray, MR, nuclear medicine and ultrasound. We now have experience of optimisation on equipment from all vendors on the Norwegian market, for example from single-detector to 256 multi-detector CT scanners. We also have a large multidisciplinary network in the region, and experience from optimisation work in several hospitals. The physicists contribute measuring equipment, image quality and dosimetry phantoms, and advanced image analysis.

At the same time, skills in the radiology and nuclear medicine departments, both within Oslo University Hospital and in the hospitals we work for, are increasing as a result of multidisciplinary image and dose optimisation projects.

We are now working on standardising CT exams for the most common clinical problems and for oncology follow-up exams in the hospitals in the region. Today, CT exams are often repeated when patients are sent from one hospital to another. In the future, unnecessary CT scans could be avoided by implementing optimised, standardised CT exams agreed by consensus among all hospitals in the region. This is a multidisciplinary task, in which radiologists, technicians and physicists need to work together.

In optimisation work, the physicists need knowledge of clinically relevant issues and of the clinical and practical limitations of the examinations. This kind of knowledge, in combination with technological knowledge, is necessary in order to advise the radiologist on the implementation and further development of advanced imaging technology. At Oslo University Hospital, a multidisciplinary CT task group was established three years ago. The group meets every week to discuss optimal and sub-optimal CT examinations, radiation protection, optimisation of the examinations with respect to image quality and radiation exposure, and optimisation of iodine contrast. Our experience from this kind of collaboration is entirely positive, even though it can sometimes be challenging. The cooperation in this group has resulted in several interesting follow-up projects, all of which have contributed to further technological and clinical improvement, and has also resulted in new reconstruction algorithms for one CT vendor and improved technology for automatic tube current modulation for another vendor.

In 2008, CT colonoscopy was introduced at the hospital and, in connection with the introduction of this new technology, a task group consisting of radiologists, radiographers, physicists, gastrointestinal surgeons and gastroenterologists was established. CT colonoscopy is now performed routinely in our hospital. In addition to the implementation of a new diagnostic method for the colon, this multidisciplinary collaboration has resulted in a national course in CT colonoscopy for radiologists, gastrointestinal surgeons, gastroenterologists, radiographers and physicists. The Nordic CT colonoscopy school, which has been arranged three times, is also a result of this multidisciplinary collaboration.

Science and research

In addition to quality assurance and operational radiation protection, our department is involved in science and research. One professor of physics at the University of Oslo is employed in the department. Six PhDs and two post-docs in MR physics and one PhD in CT physics are affiliated with our department. We are also co-responsible for the daily follow-up and management of the PET-CT core facility in the region. Other fields of research include comparison studies of different modalities, optimisation of radiation protection in paediatrics, interventional radiology and internal dosimetry.

In 2010, the department published more than 20 peer-reviewed scientific publications. Several abstracts were also accepted for presentation at international congresses in 2010.

The department is also co-responsible for post-graduate courses for radiographers at Oslo University College and for an MSc course in X-ray physics at the University of Oslo.

Conclusion

Over the last 30 years the technological development in radiology and nuclear medicine has been tremendous. Implementing and optimising the clinical use of this equipment in hospitals requires multidisciplinary collaboration. A further increase in the multidisciplinary work of diagnostic physicists in hospitals is therefore necessary to ensure that the ALARA principle is met in the future.

References

[1] Almén A, Friberg EG, Widmark A, Olerud HM. Radiology in Norway anno 2008. Trends in examination frequency and collective effective dose to the population. StrålevernRapport 2010:12. Østerås: Norwegian Radiation Protection Authority, 2010. (In Norwegian)